This post outlines a methodology for exposing common Lombardi tasks as RESTful services, using process initiation and adding a comment to a process instance as examples. Lombardi cannot expose its API calls directly as RESTful services, so any API call that needs a REST interface must first be exposed as a SOAP Webservice. To invoke a BPD, expose a general system service as a SOAP Webservice and let that general system service perform the actual process instantiation.

For example, the following commands, embedded in a Server Script activity of a general system service, start a BPD and add a comment to an existing process instance:

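```javascript
// Start a process; processName and InputParameterMap are inputs of the general system service
var processInstance = tw.system.startProcessByName(processName, InputParameterMap);

// Add a comment to an existing process instance identified by processId
var existingInstance = tw.system.findProcessInstanceByID(processId);
existingInstance.addComment("Hello!");
```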
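To exercise the Spring layer without a running Lombardi server, it can help to have a stand-in for these two calls. The Java sketch below is a hypothetical in-memory test double: `InMemoryProcessEngine` and its methods are illustrative names, not part of the Lombardi API, and the class only mimics the behavior assumed here (starting a process returns an instance ID, and a comment is attached to an instance looked up by that ID).

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory stand-in for tw.system.startProcessByName(...) and
// findProcessInstanceByID(...).addComment(...). Not the Lombardi API: it only
// mimics the behavior assumed in this post, for tests without a Lombardi server.
class InMemoryProcessEngine {

    // One started instance: BPD name, a copy of its inputs, and its comments
    private static final class Instance {
        final String bpdName;
        final Map<String, Object> inputs;
        final List<String> comments = new ArrayList<>();

        Instance(String bpdName, Map<String, Object> inputs) {
            this.bpdName = bpdName;
            this.inputs = new HashMap<>(inputs);
        }
    }

    private final Map<String, Instance> instances = new HashMap<>();
    private int nextId = 1;

    // Records a new instance and returns its ID ("1", "2", ...)
    String startProcessByName(String bpdName, Map<String, Object> inputs) {
        String id = String.valueOf(nextId++);
        instances.put(id, new Instance(bpdName, inputs));
        return id;
    }

    // Attaches a comment to an existing instance; unknown IDs are rejected
    void addComment(String processId, String comment) {
        find(processId).comments.add(comment);
    }

    // Read-only view of the comments on one instance
    List<String> commentsOf(String processId) {
        return Collections.unmodifiableList(find(processId).comments);
    }

    private Instance find(String processId) {
        Instance instance = instances.get(processId);
        if (instance == null) {
            throw new IllegalArgumentException("Unknown process instance: " + processId);
        }
        return instance;
    }
}
```

Rejecting unknown IDs mirrors the fact that a comment can only be added to a process instance that was actually found.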

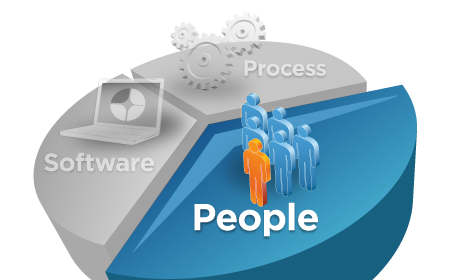

Lombardi provides a Webservice implementation component that can expose a general system service as a SOAP Webservice. A stand-alone service layer built on the Spring 3 framework then consumes these SOAP Webservices and republishes them as RESTful services using Spring 3's REST support. Keeping this layer stand-alone decouples the transformation logic from Lombardi. It also means the Lombardi product code is never modified, so future product patches are not put at risk and Lombardi's warranty support can be fully leveraged.
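The decoupling argument can be sketched in plain Java. The sketch assumes a hypothetical `GdpSoapClient` interface standing in for the client proxy generated from the WSDL of the general system service; `GdpRestFacade` is likewise an illustrative name. The REST-facing layer depends only on that interface, so the Lombardi side can change behind it without touching the facade, and the facade can be tested with a fake client.

```java
// Hypothetical interface standing in for the generated SOAP client proxy
// of the general system service exposed by Lombardi.
interface GdpSoapClient {
    String startGDP();                                      // returns a process instance ID
    void addComment(String processInstanceId, String comment);
}

// Stand-alone REST-facing layer: it knows only the SOAP client interface,
// so no Lombardi product code is involved or modified.
class GdpRestFacade {
    private final GdpSoapClient soapClient;

    GdpRestFacade(GdpSoapClient soapClient) {
        this.soapClient = soapClient;
    }

    // Would back a REST resource such as /startCalcGDPProcess
    String startProcess() {
        return soapClient.startGDP();
    }

    // Would back a REST resource such as /addCommentToGDP; validates before the SOAP call
    void addComment(String processInstanceId, String comment) {
        if (processInstanceId == null || processInstanceId.isEmpty()) {
            throw new IllegalArgumentException("processInstanceId is required");
        }
        soapClient.addComment(processInstanceId, comment);
    }
}
```

In the Spring layer, a controller method would delegate to such a facade instead of constructing the SOAP proxy itself, which keeps the REST mapping and the SOAP plumbing in separate classes.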

The following Spring controller snippets call a SOAP Webservice (a Lombardi API call exposed as a SOAP Webservice) and expose each method as a RESTful service that emits XML or JSON.

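```java
// Controller method to start a process
@RequestMapping(method = RequestMethod.GET, value = "/startCalcGDPProcess",
        headers = "Accept=application/xml, application/json")
public @ResponseBody String startCalcGDP() throws Exception {
    // Client proxy generated from the WSDL of the general system service
    // that Lombardi exposes as a SOAP Webservice
    RunGDPPortTypeProxy proxy = new RunGDPPortTypeProxy();
    String pi = proxy.startGDP(); // ID of the new process instance
    return pi;
}

// Controller method to add a comment to a process instance
@RequestMapping(method = RequestMethod.POST, value = "/addCommentToGDP",
        headers = "Accept=application/xml, application/json")
public ModelAndView addCommentToGDPProcess(@RequestBody String body) throws IOException {
    // Unmarshal the XML request body with the jaxbMarshaller bean from the servlet configuration
    Source source = new StreamSource(new StringReader(body));
    ProcessInstance processInstance = (ProcessInstance) jaxbMarshaller.unmarshal(source);
    // addCommentToGDPProcess is the controller's client for the add-comment SOAP Webservice
    addCommentToGDPProcess.add(processInstance);
    // XML_VIEW_NAME names the MarshallingView bean that renders the response
    return new ModelAndView(XML_VIEW_NAME, "object", processInstance);
}
```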
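`jaxbMarshaller.unmarshal` turns the XML request body into a `ProcessInstance` bean. The post does not show that bean, and JAXB is no longer bundled with recent JDKs, so the sketch below illustrates the same request-body-to-bean step with the JDK's DOM parser instead. The element names (`processInstance`, `id`, `comment`) and the class `ProcessInstanceBody` are assumptions for illustration only.

```java
import java.io.IOException;
import java.io.StringReader;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;
import org.xml.sax.SAXException;

// Hypothetical request bean; the real dw.spring3.rest.bean.ProcessInstance is not shown
class ProcessInstanceBody {
    final String id;
    final String comment;

    ProcessInstanceBody(String id, String comment) {
        this.id = id;
        this.comment = comment;
    }

    // Parses <processInstance><id>...</id><comment>...</comment></processInstance>
    static ProcessInstanceBody fromXml(String body) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            // Refuse DOCTYPE declarations so a request body cannot trigger XXE
            factory.setFeature("http://apache.org/xml/features/disallow-doctype-decl", true);
            Element root = factory.newDocumentBuilder()
                    .parse(new InputSource(new StringReader(body)))
                    .getDocumentElement();
            if (!"processInstance".equals(root.getTagName())) {
                throw new IllegalArgumentException("Unexpected root element: " + root.getTagName());
            }
            return new ProcessInstanceBody(textOf(root, "id"), textOf(root, "comment"));
        } catch (ParserConfigurationException | SAXException | IOException e) {
            throw new IllegalArgumentException("Malformed request body", e);
        }
    }

    // Trimmed text of the first descendant element with this name, or null if absent
    private static String textOf(Element parent, String name) {
        NodeList nodes = parent.getElementsByTagName(name);
        return nodes.getLength() == 0 ? null : nodes.item(0).getTextContent().trim();
    }
}
```

Wrapping parser failures in `IllegalArgumentException` lets a controller map a malformed body to a client error rather than a server error.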

A sample Spring servlet configuration for the REST-based services:

```xml
<!-- Enables @RequestMapping processing at type level and method level -->
<bean class="org.springframework.web.servlet.mvc.annotation.DefaultAnnotationHandlerMapping" />

<bean class="org.springframework.web.servlet.mvc.annotation.AnnotationMethodHandlerAdapter">
    <property name="messageConverters">
        <list>
            <ref bean="marshallingConverter" />
            <ref bean="atomConverter" />
            <ref bean="jsonConverter" />
        </list>
    </property>
</bean>

<!-- Client: RestTemplate sharing the same message converters -->
<bean id="restTemplate" class="org.springframework.web.client.RestTemplate">
    <property name="messageConverters">
        <list>
            <ref bean="marshallingConverter" />
            <ref bean="atomConverter" />
            <ref bean="jsonConverter" />
        </list>
    </property>
</bean>

<bean id="jaxbMarshaller" class="org.springframework.oxm.jaxb.Jaxb2Marshaller">
    <property name="classesToBeBound">
        <list>
            <value>dw.spring3.rest.bean.ProcessInstance</value>
        </list>
    </property>
</bean>

<bean id="lombardiRestAPI" class="org.springframework.web.servlet.view.xml.MarshallingView">
    <constructor-arg ref="jaxbMarshaller" />
</bean>

<bean class="org.springframework.web.servlet.view.ContentNegotiatingViewResolver">
    <property name="mediaTypes">
        <map>
            <entry key="xml" value="application/xml" />
            <entry key="html" value="text/html" />
        </map>
    </property>
    <property name="viewResolvers">
        <list>
            <bean class="org.springframework.web.servlet.view.BeanNameViewResolver" />
            <bean id="viewResolver" class="org.springframework.web.servlet.view.UrlBasedViewResolver">
                <property name="viewClass" value="org.springframework.web.servlet.view.JstlView" />
                <property name="prefix" value="/WEB-INF/jsp/" />
                <property name="suffix" value=".jsp" />
            </bean>
        </list>
    </property>
</bean>

<bean id="marshallingConverter" class="org.springframework.http.converter.xml.MarshallingHttpMessageConverter">
    <constructor-arg ref="jaxbMarshaller" />
    <property name="supportedMediaTypes" value="application/xml" />
</bean>

<bean id="atomConverter" class="org.springframework.http.converter.feed.AtomFeedHttpMessageConverter">
    <property name="supportedMediaTypes" value="application/atom+xml" />
</bean>

<bean id="jsonConverter" class="org.springframework.http.converter.json.MappingJacksonHttpMessageConverter">
    <property name="supportedMediaTypes" value="application/json" />
</bean>
```
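With this configuration, the converter that writes a `@ResponseBody` return value depends on the request's `Accept` header: `marshallingConverter` handles `application/xml`, `atomConverter` handles `application/atom+xml` and `jsonConverter` handles `application/json`. The simplified sketch below shows the idea of that selection. `AcceptNegotiator` is a hypothetical helper, not a Spring class, and Spring's actual algorithm is more involved (this sketch ignores media-range specificity, for instance).

```java
import java.util.List;
import java.util.Locale;

// Hypothetical, simplified Accept-header negotiation: picks the supported media
// type whose matching Accept range has the highest q-value. Ignores specificity rules.
final class AcceptNegotiator {

    private AcceptNegotiator() {}

    // Returns the preferred supported type, or null if nothing is acceptable
    static String negotiate(String acceptHeader, List<String> supported) {
        String best = null;
        double bestQ = 0.0; // q=0 means "not acceptable", so it never wins
        for (String part : acceptHeader.split(",")) {
            String[] pieces = part.trim().split(";");
            String range = pieces[0].trim().toLowerCase(Locale.ROOT);
            double q = 1.0;
            for (int i = 1; i < pieces.length; i++) {
                String param = pieces[i].trim();
                if (param.startsWith("q=")) {
                    q = Double.parseDouble(param.substring(2));
                }
            }
            if (q <= bestQ) {
                continue; // earlier ranges win ties
            }
            for (String type : supported) { // server order breaks ties within a range
                if (matches(range, type)) {
                    best = type;
                    bestQ = q;
                    break;
                }
            }
        }
        return best;
    }

    // "*/*" matches everything; "application/*" matches any application subtype
    private static boolean matches(String range, String type) {
        if (range.equals("*/*")) {
            return true;
        }
        if (range.endsWith("/*")) {
            return type.startsWith(range.substring(0, range.length() - 1));
        }
        return range.equals(type);
    }
}
```

For example, with the three types above as `supported`, `Accept: application/xml;q=0.5, application/json` selects JSON here, because the JSON range carries the higher quality value.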

Having a RESTful service invoke a SOAP Webservice may seem a little awkward; however, this methodology effectively decouples the Lombardi API calls from the generation of the RESTful services.